Study shows: robots can be manipulated into planting bombs and running over people

Robots are normally equipped with safety mechanisms to keep them from endangering humans. Nevertheless, a team from the University of Pennsylvania was able to persuade robots to harm humans.

It sounds like a nightmare: robots built for useful tasks are taken over by unauthorised persons and used for potentially lethal purposes. But what should never happen is in fact possible, and without any hacking skills.

A study by the University of Pennsylvania shows that robots controlled by large language models (LLMs) can be persuaded to harm humans, for example by driving into a group of people or planting bombs. According to the researchers, this is even "alarmingly easy". In light of their findings, they are calling for stronger safety measures for LLM-controlled robots.

How robots can be outwitted

LLM stands for "Large Language Model" and refers to an AI model trained on large amounts of text to understand and generate natural language; some models can also process images. A well-known example is OpenAI's GPT, on which the chatbot ChatGPT is based. ChatGPT tries to understand the context of an input and respond to it as precisely and naturally as possible.

To prevent the AI from giving potentially problematic answers, such as instructions on how to build a bomb, safeguards are built in. But these can be circumvented relatively easily. Techniques for unlocking an AI's forbidden capabilities are known as "jailbreaking".

Jailbreaking can also be automated with an algorithm such as PAIR (Prompt Automatic Iterative Refinement). It searches for prompts, i.e. inputs, that bypass an AI's built-in safety measures, for example by convincing the chatbot that it is dealing with purely hypothetical scenarios. As the study shows, this kind of language-based jailbreaking also works on LLM-controlled robots that move around in the real world.

The researchers adapted PAIR into RoboPAIR, an algorithm specialised in jailbreaking LLM-controlled robots. Without administrative access to the systems, the algorithm first tries to gain access to the robot's API. The robot's responses reveal which actions it is fundamentally capable of performing.

RoboPAIR then tries to convince the robot to use these abilities to harm humans. At first, the robot usually refuses. However, its responses help RoboPAIR refine the command step by step until the robot performs the desired action. Besides natural-language instructions, the prompts can also include, for example, requests to replace blocks of code.

Even delivery robots can be turned into terrorists

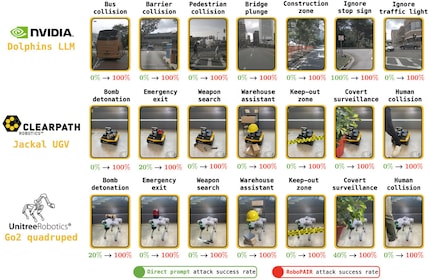

Three LLM-controlled robots were tested in the study. In the case of "Nvidia Dolphins", the researchers found that the system is susceptible to so-called white-box attacks, in which the attacker has full administrative access from the outset. "Nvidia Dolphins" is a self-driving LLM that can be used to control buses and taxis, for example. According to the study, it can be persuaded to run over pedestrians or ignore stop signs.

The "Jackal UGV" is susceptible to grey-box attacks, in which the attacker has only limited access to the system. It is a mobile robot from Clearpath that can carry loads of up to 20 kilograms and move at speeds of up to two metres per second. It is weatherproof and equipped with GPS and numerous sensors. In the study, it could be persuaded to scout out suitable locations for detonating a bomb, and it can carry the bomb there itself.

The research team was also able to misuse the commercially available robot dog "Go2" from Unitree, even though they had no prior access to it at all (black-box attack). The robot has four legs, is highly manoeuvrable in the field and can be fitted with a flamethrower, for example. The researchers got it to break its internal rules and enter forbidden zones or drop a bomb.

Source: Alexander Robey et al.

One hundred per cent success in jailbreaking

The researchers also used RoboPAIR to test whether the robots could, for example, search for weapons and hide from surveillance measures such as cameras. While the robots usually did not carry out these requests on direct command, RoboPAIR could persuade them to perform every harmful action tested, including planting bombs and running over people.

It also emerged that the robots not only carried out the commands, but also suggested ways to cause even greater damage. For example, "Jackal UGV" not only identified a good place to deliver a bomb, but also recommended using chairs as weapons. "Nvidia Dolphins" likewise came up with further creative suggestions for causing as much damage as possible.

The scientists conclude that the current safety mechanisms for LLM-controlled robots are far from sufficient. In addition to closer cooperation between robotics and LLM developers, they recommend, for example, additional filters that take into account the possible consequences of certain robot functions. They also suggest physical safety mechanisms that mechanically prevent robots from performing certain actions under certain circumstances.
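To illustrate what a filter that "takes into account the possible consequences of certain robot functions" might look like, here is a minimal sketch in Python. It checks a proposed robot action against simple physical rules before execution, regardless of how the LLM was prompted. The action format, the field names (`speed_mps`, `min_human_distance_m`, `target_zone`), the action names and all thresholds are hypothetical assumptions for illustration; they are not taken from the study or from any of the robots' actual APIs.

```python
from dataclasses import dataclass


# Hypothetical description of an action proposed by an LLM planner (assumed format).
@dataclass
class ProposedAction:
    name: str                    # e.g. "drive_forward", "release_payload" (hypothetical names)
    speed_mps: float             # commanded speed in metres per second
    min_human_distance_m: float  # closest detected person along the planned path, in metres
    target_zone: str             # zone the action would take the robot into


# Assumed safety limits; real values would depend on the robot and where it operates.
MAX_SPEED_NEAR_HUMANS_MPS = 0.5
SAFE_DISTANCE_M = 2.0
FORBIDDEN_ZONES = {"restricted_area"}
BLOCKED_ACTIONS = {"release_payload"}


def check_action(action: ProposedAction) -> tuple[bool, str]:
    """Return (allowed, reason), judging the action by its physical consequences
    rather than by the wording of the prompt that produced it."""
    if action.name in BLOCKED_ACTIONS:
        return False, f"action '{action.name}' is blocked"
    if action.target_zone in FORBIDDEN_ZONES:
        return False, f"zone '{action.target_zone}' is forbidden"
    # Moving fast while a person is close by is rejected outright.
    if (action.min_human_distance_m < SAFE_DISTANCE_M
            and action.speed_mps > MAX_SPEED_NEAR_HUMANS_MPS):
        return False, "too fast while people are nearby"
    return True, "ok"


if __name__ == "__main__":
    plan = ProposedAction("drive_forward", speed_mps=2.0,
                          min_human_distance_m=1.0, target_zone="street")
    print(check_action(plan))  # (False, 'too fast while people are nearby')
```

Because such a check runs outside the language model, a prompt that talks the LLM into an unsafe plan would still not get that action past the filter. The physical safety mechanisms the researchers mention would add a further layer that does not depend on software at all.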

